The weekend update: HLS fMP4 and a new uploader

The player

We’ve changed the layout of the player to hopefully make it even easier to use. The seek bar has been widened and moved back to the centre, rather than being on its own row.

For those users who want to adjust the colour, you can change the background of the main play button and also the control bar.

The player now incorporates additional layers of error handling to reduce the chance of failed playback. If a component such as the manifest fails to load, the player will now retry, so temporary faults should be recovered from automatically. For example, if a networking issue (such as with your WiFi connection) means a fragment cannot load, the player will attempt to load it again after a short delay.
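As a rough illustration of that retry behaviour, here is a minimal sketch in Python. It is not the player's actual code: the function name `load_with_retry`, the number of attempts and the length of the delay are all assumptions made for the example.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def load_with_retry(
    load: Callable[[], T],
    max_attempts: int = 3,          # assumed value, for illustration only
    delay_seconds: float = 1.0,     # assumed "short delay"
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call load() until it succeeds or the attempts run out."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return load()
        except OSError:
            # ConnectionError and TimeoutError are subclasses of OSError,
            # so this covers typical temporary network faults.
            if attempt == max_attempts:
                raise  # give up and surface the last error
            sleep(delay_seconds)
    raise AssertionError("unreachable")
```

The `sleep` parameter exists only so the delay can be swapped out when trying the sketch, without actually waiting.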

We’ve also changed the logic the player uses when loading segments. Now when the player loads, it downloads only the first one or two segments. That means they are ready if the viewer clicks play, but minimises the amount of bandwidth wasted if they don’t. If they do click play, the player then attempts to apply your chosen ‘buffering goal’ (in seconds). For example, you may want the player to attempt to buffer 30 seconds ahead of the viewer’s current position. There is a trade-off between performance and data usage: a larger buffer reduces the risk of a stall, but means data is downloaded unnecessarily if the viewer does not reach that point in the video.
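To make the buffering goal concrete, the sketch below works out which segments would be needed before and after the viewer clicks play. The function names, the six-second segment duration used in the example and the preload count of two are assumptions for illustration, not the player's real settings.

```python
import math


def initial_segments(total_segments: int, preload: int = 2) -> range:
    """Segments fetched when the player loads, before play is clicked."""
    return range(0, min(preload, total_segments))


def segments_for_goal(
    position: float,          # viewer's current position, in seconds
    buffering_goal: float,    # how far ahead to buffer, in seconds
    segment_duration: float,  # length of each segment, in seconds
    total_segments: int,
) -> range:
    """Segment indexes needed to cover position .. position + goal."""
    first = int(position // segment_duration)
    # Exclusive upper bound: the segment containing the end of the window.
    last = math.ceil((position + buffering_goal) / segment_duration)
    return range(first, min(last, total_segments))
```

With a viewer 12 seconds in, a 30-second goal and 6-second segments, `segments_for_goal(12, 30, 6, 100)` covers segments 2 to 6 (seconds 12 to 42). Doubling the goal roughly doubles the number of segments requested ahead of the viewer, which is the data cost of the trade-off described above.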

Transcoding

We have changed our packaging and now use HLS fMP4 (fragmented MP4). You may remember we explored this over a year ago (https://www.vidbeo.com/blog/dash-hls-and-hls-fmp4) once Apple announced support at WWDC2016:

Previously you had to use MPEG-2 Transport Stream (.ts segments).

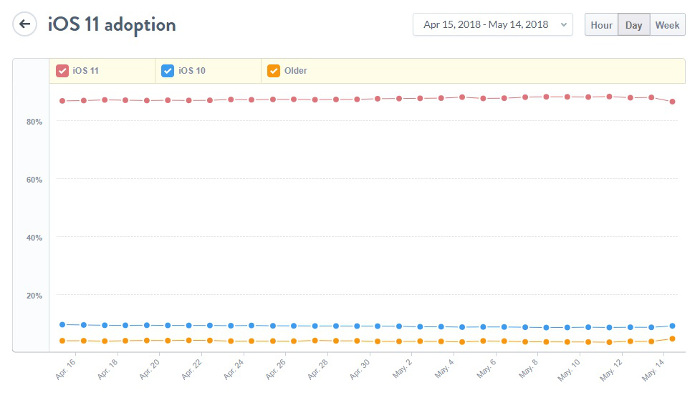

Since it was still new, there remained issues with it. The fMP4 version is only supported in iOS 10 onwards, and iOS 10+ adoption had not quite reached sufficient levels. The primary reason for using HLS at all is iOS, which does not support the most popular alternative, DASH, so it was key that fMP4 was widely supported there. Now it is, especially as a newer version of iOS, version 11, has since been released:

Source: https://mixpanel.com/trends/#report/ios\_11/from\_date:-29,report\_unit:day,to\_date:0.

Using MP4 fragments has several benefits. It means we can use the same segments for DASH if we enable that too. Otherwise the segments are duplicated (MPEG-2 TS for HLS, MP4 for DASH), which means double the storage, double the cost, and a greater chance of a CDN cache miss, resulting in a slower pull from the origin. More importantly, because of the way the files are structured, you get higher network efficiency at lower bitrates (Apple discuss this in their WWDC2016 presentation introducing it).

Using HLS fMP4 also opens the door to common encryption across the major browsers. This is normally done using MPEG-CENC (‘Common Encryption’). That works for Widevine and for PlayReady, the two DRM systems owned by Google and Microsoft respectively. Apple have their own DRM system, called FairPlay, and with fMP4 it now also supports CENC. However, FairPlay currently requires the ‘cbcs’ mode of CENC, which Google’s browser, Chrome, does not support playing. Google are adding support in the latest version of Android, but again we run into the issue of a lack of adoption, meaning we cannot rely on a user owning a supported device. So this support for CENC is a benefit for the future, rather than something used now.

Another part of the transcoding process that has changed in this update is that now only clients with custom encoding profiles need be concerned about picking one. For most users, our standard set of renditions (usually ranging from a poor quality SD for slow connections, up to a high quality HD for fast connections) is perfectly fine, and that all happens automatically. Some users have a specific requirement for a custom set of renditions. For example, if you know your viewers will be on slow connections, you might want to avoid wasting time generating - and storing - an HD copy of each video and focus on the lower bitrates. For those users we can set up a custom profile. Once done, it will become available to select from a dropdown menu the next time you upload a video.

Internally the structure of that encoding profile has changed too to make future support for DRM simpler.

The control panel

We recognise that uploading a file can be problematic for users with poor connections. If you are uploading a 1GB file and your connection drops at 90%, that is very frustrating. Therefore we have changed how resumable uploading works to try to solve that. When you upload a file, it is now internally divided into segments and uploaded in separate parts, one after another. They are then recombined seamlessly at the other end. The advantage of this approach is that if your connection is lost, only the segment being uploaded at that point is lost, and so the upload can pick up from that point when you try again. Let’s say your video is 100MB and the segments are 5MB in size. If the connection fails at around 50%, you’ve already uploaded around 50MB of data. If you retry uploading that same file shortly after, the system recognises that 50MB has already been uploaded and resumes from that point, with the 50MB-55MB piece. If this happens successfully you should see the progress bar skip forward rapidly to catch up with where it left off.
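The arithmetic in that example can be sketched as follows. This is only an illustration of the idea, not our uploader's code: the function names are hypothetical, and how the system actually records which parts have arrived is not shown.

```python
MB = 1024 * 1024


def part_ranges(file_size: int, part_size: int) -> list[tuple[int, int]]:
    """Return (start, end) byte offsets for each part; end is exclusive."""
    if part_size <= 0:
        raise ValueError("part_size must be positive")
    return [
        (start, min(start + part_size, file_size))
        for start in range(0, file_size, part_size)
    ]


def parts_to_upload(
    file_size: int, part_size: int, already_uploaded: set[int]
) -> list[int]:
    """Indexes of the parts that still need sending when an upload resumes."""
    total = len(part_ranges(file_size, part_size))
    return [index for index in range(total) if index not in already_uploaded]


# A 100MB file in 5MB parts where the first ten parts arrived before the drop.
ranges = part_ranges(100 * MB, 5 * MB)
remaining = parts_to_upload(100 * MB, 5 * MB, set(range(10)))
resume_start, resume_end = ranges[remaining[0]]
print(len(ranges), remaining[0], resume_start // MB, resume_end // MB)
```

Here the file splits into 20 parts and the resumed upload starts at part index 10, which is the 50MB-55MB piece from the example above.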

Like most sites, we sign users out automatically after a while. To further help avoid failed uploads, that session-time has been greatly increased.

This update also fixed an occasional bug rendering the storage graph, if you have access to that data.

We’ve now added an iframe delegation policy to our embed code. This is not currently required by many browsers, however Chrome - the most popular browser - has added support for it and encourages its use. You will see our iframe embed code now includes an allow="fullscreen" attribute. You are of course free to remove that, or add your own values (such as autoplay).

The iframe delegation feature policy is a way to control what the iframe can do, and replaces the separate attributes, such as allowfullscreen. You can read more about it here in the wider context of auto-playing content: https://developers.google.com/web/updates/2017/09/autoplay-policy-changes.

We generally don’t recommend setting your content to auto-play due to the complexities around it, and have deprecated support for triggering it in the URL via a query string. Auto-play is often blocked by the browser to prevent adverts exploiting it. When that happens, you will often see this error:

play() failed because the user didn’t interact with the document first

Speed

New CDN edge PoPs have been enabled in Asia, in Singapore and Bangalore. Content cached there will therefore be served faster to your viewers in South and South-East Asia.

A ‘hop’ has been removed from our image-serving process. Images now go from the origin to the resizer (which returns the image at the size it is embedded at, to avoid wasting bandwidth) and then to the CDN edge location. The resizer can be skipped entirely if there is a copy in the cache from a previous viewer.
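The cache shortcut can be pictured with a small sketch. Everything here is an assumption for illustration: the function names, the use of a plain dictionary as the cache, and the choice to key the cache on image ID and width are not a description of our actual infrastructure.

```python
from typing import Callable, Dict, Tuple

CacheKey = Tuple[str, int]  # (image id, requested width in pixels)


def serve_image(
    image_id: str,
    width: int,
    cache: Dict[CacheKey, bytes],
    fetch_origin: Callable[[str], bytes],
    resize: Callable[[bytes, int], bytes],
) -> bytes:
    """Return the image at the requested width, resizing only on a cache miss."""
    key = (image_id, width)
    if key in cache:
        # A previous viewer already asked for this size: skip origin and resizer.
        return cache[key]
    resized = resize(fetch_origin(image_id), width)
    cache[key] = resized
    return resized
```

On the first request for a given size, both `fetch_origin` and `resize` run. Later requests for the same image and width are answered from the cache without touching either.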

As mentioned above, HLS fMP4 (automatically used for content going forward) is generally more efficient than MPEG-2 Transport Stream. While the difference is small - a few percentage points - that can add up when the amount of data transferred is large. We can serve a video of the same quality at a slightly smaller size, so it downloads that bit quicker.

We have added a longer cache header to our transcoded assets, so they should stay cached for longer at the CDN edge and in the viewer’s browser (for a second viewing).

If you have any problems with any of these updates, or are interested in finding out more about our enterprise video hosting, please email us at [email protected] and we’ll be able to assist you. You can start a free seven-day trial.

Updated: May 14, 2018