Subtitles and closed captions in HLS using WebVTT

HLS (HTTP Live Streaming) can be confusing, particularly when it comes to subtitles and closed captions. We use HLS extensively to distribute your video content (despite its name, it is widely used for video on demand too), so this article explains how it works.

What’s the difference between subtitles and captions?

Closed captions and subtitles are for different purposes.

Subtitles assume the viewer can hear the audio but needs a text alternative to the spoken dialogue. An example would be translating the dialogue into a different language.

Closed captions assume the viewer can’t hear the audio. As such, the captions also describe the soundtrack such as background noise, telephones ringing, and other audio cues.

If you use an Apple device, you may have seen the letters SDH. That stands for “subtitles for the deaf and hard of hearing”. These are subtitles that also describe non-speech audio, so in practice they are treated as captions.

Does HLS support subtitles or captions?

Yes. Apple’s HLS specification supports both subtitles and closed captions, and Apple recommends including them to improve accessibility. The supported formats are CEA-608, CEA-708, WebVTT and IMSC1.

But note the HLS specification says:

“Closed captions (if any) MUST be included in the video Media Segments”

That is important, and it makes closed captions more complicated to manage. Because the captions are carried inside the video segments, they need to be available at the point the video is uploaded. If they are not, the video content has to be re-packaged each time closed captions are added or changed, which incurs extra costs in storage, compute, and time.

Using subtitles in HLS is therefore more flexible, as they can be served from a separate file and so managed independently. If you add subtitles later (such as when a translator provides them to you in, say, German), they can be added to an HLS manifest without having to touch the media files. That’s much easier too, as an HLS manifest is simply a text file. It also means the media files can remain in cache, improving performance.

Subtitles can be treated the same as closed captions (to provide accessibility to those who are deaf or hard of hearing) by:

- adding a CHARACTERISTICS attribute such as CHARACTERISTICS="public.accessibility.transcribes-spoken-dialog,public.accessibility.describes-music-and-sound"

- setting an AUTOSELECT attribute with a value of YES

We’ll go into a bit more detail later on.
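As a rough sketch of those two steps, the Python function below assembles an EXT-X-MEDIA line for a subtitles track. The function name, its parameters and the `sdh` switch are hypothetical and chosen only for illustration; this is not the code our platform runs.

```python
def build_subtitles_media_tag(name, language, uri, group_id="subs", sdh=False):
    """Return an EXT-X-MEDIA line for a WebVTT subtitles track.

    Illustrative helper only: the name and parameters are assumptions.
    """
    # Every track transcribes the dialogue; an SDH-style track also
    # describes music and sound effects.
    characteristics = ["public.accessibility.transcribes-spoken-dialog"]
    if sdh:
        characteristics.append("public.accessibility.describes-music-and-sound")
    joined = ",".join(characteristics)

    attributes = [
        "TYPE=SUBTITLES",
        f'GROUP-ID="{group_id}"',
        f'NAME="{name}"',
        "DEFAULT=NO",
        "AUTOSELECT=YES",  # lets the client pick the track automatically
        "FORCED=NO",
        f'LANGUAGE="{language}"',
        f'CHARACTERISTICS="{joined}"',
        f'URI="{uri}"',
    ]
    return "#EXT-X-MEDIA:" + ",".join(attributes)


print(build_subtitles_media_tag("English (SDH)", "en", "subtitles_en_sdh.m3u8", sdh=True))
```

The file name `subtitles_en_sdh.m3u8` is made up for the example. Quoted values such as NAME and URI are written as-is, so this sketch assumes they contain no double quotes.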

What subtitle file formats are there?

The dominant subtitle file formats are TTML (Timed Text Markup Language), SRT (SubRip) and WebVTT (Web Video Text Tracks Format).

The HLS specification mentioned above is clear about which must be used:

“Subtitles MUST be WebVTT (according to the HLS specification) or IMSC1 in fMP4”

IMSC1 is TTML Profiles for Internet Media Subtitles and Captions.

Like many online video platforms, we use fMP4 for our on-demand videos, and we use WebVTT for all of our subtitles. WebVTT is a W3C standard, is widely supported, and is easy to read.

What is WebVTT?

WebVTT is a text format for specifying when captions or subtitles should be shown. Each file must be encoded using UTF-8.

They will normally have the extension .vtt and have the MIME type of text/vtt.

A WebVTT file should start with a single WEBVTT line, followed by a blank line, and then zero or more blocks of text (known as cues). Each cue begins with a timing line that tells the client the times during which its text should be shown.

For example:

WEBVTT

00:00:01.000 --> 00:00:04.000
- This is an example

00:00:05.000 --> 00:00:09.000
- of using WebVTT.

In the example above we have used the timecode format HH:MM:SS.mmm (note the full stop before the milliseconds), and so the first cue would be shown between 1 second and 4 seconds into its accompanying video.
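To make the timing line concrete, here is a minimal Python sketch that converts one into start and end times in seconds. The names `parse_cue_timing` and `to_seconds` are our own for this example, and the pattern only covers plain timings like the ones above (it ignores any cue settings that follow the end time).

```python
import re

# One WebVTT timestamp: optional hours, then MM:SS.mmm.
TIMESTAMP = r"(?:(\d{2,}):)?([0-5]\d):([0-5]\d)\.(\d{3})"
CUE_TIMING = re.compile(rf"^{TIMESTAMP}\s+-->\s+{TIMESTAMP}")


def to_seconds(hours, minutes, seconds, millis):
    """Convert the captured timestamp parts into seconds as a float."""
    return int(hours or 0) * 3600 + int(minutes) * 60 + int(seconds) + int(millis) / 1000


def parse_cue_timing(line):
    """Return (start, end) in seconds for a cue timing line."""
    match = CUE_TIMING.match(line)
    if match is None:
        raise ValueError(f"not a cue timing line: {line!r}")
    parts = match.groups()
    return to_seconds(*parts[:4]), to_seconds(*parts[4:])


print(parse_cue_timing("00:00:01.000 --> 00:00:04.000"))
```

For the first cue in the example, this prints `(1.0, 4.0)`.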

If you would like to find out more about WebVTT there is a good explanation on the Mozilla site: https://developer.mozilla.org/en-US/docs/Web/API/WebVTT_API.

How should the HLS manifest be structured?

An example of a primary HLS manifest containing subtitles is shown below. This video has a single subtitles track, in English:

#EXTM3U

#EXT-X-INDEPENDENT-SEGMENTS

#EXT-X-MEDIA:TYPE=AUDIO,GROUP-ID="audio",NAME="Default",AUTOSELECT=YES,LANGUAGE="en",CHANNELS="2",URI="aac_128k/audio.m3u8"

#EXT-X-MEDIA:TYPE=SUBTITLES,GROUP-ID="subs",NAME="English",DEFAULT=NO,AUTOSELECT=YES,FORCED=NO,LANGUAGE="en",CHARACTERISTICS="public.accessibility.transcribes-spoken-dialog",URI="subtitles_en.m3u8"

#EXT-X-STREAM-INF:BANDWIDTH=629166,AVERAGE-BANDWIDTH=480134,CODECS="avc1.4d4015,mp4a.40.2",RESOLUTION=426x240,FRAME-RATE=24.000,AUDIO="audio",SUBTITLES="subs",VIDEO-RANGE=SDR

h264_240p/video.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=1083378,AVERAGE-BANDWIDTH=733406,CODECS="avc1.4d401e,mp4a.40.2",RESOLUTION=640x360,FRAME-RATE=24.000,AUDIO="audio",SUBTITLES="subs",VIDEO-RANGE=SDR

h264_360p/video.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=2748252,AVERAGE-BANDWIDTH=1488549,CODECS="avc1.64001f,mp4a.40.2",RESOLUTION=1280x720,FRAME-RATE=24.000,AUDIO="audio",SUBTITLES="subs",VIDEO-RANGE=SDR

h264_720p/video.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=5438980,AVERAGE-BANDWIDTH=2868620,CODECS="avc1.640028,mp4a.40.2",RESOLUTION=1920x1080,FRAME-RATE=24.000,AUDIO="audio",SUBTITLES="subs",VIDEO-RANGE=SDR

h264_1080p/video.m3u8

The line we are interested in is the fourth one:

#EXT-X-MEDIA:TYPE=SUBTITLES,GROUP-ID="subs",NAME="English",DEFAULT=NO,AUTOSELECT=YES,FORCED=NO,LANGUAGE="en",CHARACTERISTICS="public.accessibility.transcribes-spoken-dialog",URI="subtitles_en.m3u8"
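If you want to inspect a line like this programmatically, the attribute list can be split into name and value pairs. The sketch below is one way to do that in Python; `parse_attribute_list` is a hypothetical helper, and it assumes quoted values never contain a double quote.

```python
import re

# A value is either a quoted string (which may contain commas) or an
# unquoted run of characters up to the next comma.
ATTRIBUTE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')


def parse_attribute_list(tag_line):
    """Return the attributes of a tag such as #EXT-X-MEDIA as a dict."""
    # Everything after the first colon is the attribute list.
    _, _, attribute_text = tag_line.partition(":")
    attributes = {}
    for name, value in ATTRIBUTE.findall(attribute_text):
        attributes[name] = value.strip('"')
    return attributes


line = (
    '#EXT-X-MEDIA:TYPE=SUBTITLES,GROUP-ID="subs",NAME="English",'
    'DEFAULT=NO,AUTOSELECT=YES,FORCED=NO,LANGUAGE="en",'
    'CHARACTERISTICS="public.accessibility.transcribes-spoken-dialog",'
    'URI="subtitles_en.m3u8"'
)
print(parse_attribute_list(line)["URI"])
```

This prints `subtitles_en.m3u8`. Matching quoted values first matters because some attributes, such as CODECS on the stream lines, contain commas inside their quotes.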

What attributes can you set?

Some of the attributes within the subtitles line above taken from the HLS manifest are (reasonably) self-explanatory:

- NAME (such as “English”)

- LANGUAGE (such as “en”, for English)

- URI (such as “subtitles_en.m3u8”).

You may have noticed there are also several optional flags you can set which aren’t so obvious: AUTOSELECT, DEFAULT and FORCED. Each of these can be set to YES or NO. If you do not include one of these flags, its implicit value is NO.

DEFAULT

Set DEFAULT as YES if these subtitles should be selected when the user hasn’t provided any other information to indicate a different preference.

If you do set DEFAULT as YES, make sure to also set AUTOSELECT as YES.

In our video CMS we don’t currently provide an option to set a default language and so we set DEFAULT as NO. We leave it up to the client to pick.

AUTOSELECT

Set AUTOSELECT as YES if the client may select these subtitles automatically when the user has not expressed an explicit preference for another track.

The track will also be listed as available for the user to select. We set AUTOSELECT as YES for all subtitle tracks.

FORCED

Set FORCED as YES if these subtitles are considered essential to play. We set FORCED as NO for all subtitle tracks.

The behaviour of FORCED is inconsistent across devices and browsers (if it’s supported at all). Often tracks which have FORCED set as YES won’t be listed on the user’s language selection menu (that is how Apple devices handle this flag).

If you do set FORCED as YES, you should also set AUTOSELECT as YES.

The FORCED flag should only be included if the TYPE attribute is set as SUBTITLES (which is why it is applicable to our usage).
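The rules for these three flags can be summarised as a small check. The Python sketch below takes the attributes of one EXT-X-MEDIA line as a dictionary and reports anything that goes against the advice in this section. The function names are hypothetical, and the checks encode our recommendations rather than every requirement of the HLS specification.

```python
FLAGS = ("DEFAULT", "AUTOSELECT", "FORCED")


def effective_flags(attributes):
    """Fill in the implicit NO for any flag that is not present."""
    return {flag: attributes.get(flag, "NO") for flag in FLAGS}


def check_selection_flags(attributes):
    """Return a list of problems found; an empty list means none."""
    problems = []
    flags = effective_flags(attributes)

    for flag, value in flags.items():
        if value not in ("YES", "NO"):
            problems.append(f"{flag} must be YES or NO, not {value}")

    if flags["DEFAULT"] == "YES" and flags["AUTOSELECT"] != "YES":
        problems.append("DEFAULT=YES should be paired with AUTOSELECT=YES")

    if flags["FORCED"] == "YES" and flags["AUTOSELECT"] != "YES":
        problems.append("FORCED=YES should be paired with AUTOSELECT=YES")

    # FORCED is only meaningful on subtitles tracks.
    if "FORCED" in attributes and attributes.get("TYPE") != "SUBTITLES":
        problems.append("FORCED should only appear when TYPE=SUBTITLES")

    return problems


track = {"TYPE": "SUBTITLES", "DEFAULT": "NO", "AUTOSELECT": "YES", "FORCED": "NO"}
print(check_selection_flags(track))
```

For the combination we use (DEFAULT=NO, AUTOSELECT=YES, FORCED=NO) the list is empty.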

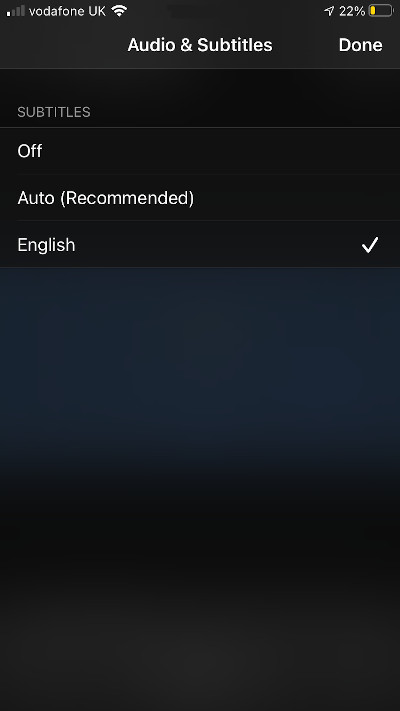

As you can see in the example line above, our video CMS sets the three flags like this: DEFAULT=NO,AUTOSELECT=YES,FORCED=NO. As a result, the subtitles are listed in the menu. On iOS the native menu looks like this:

Why add subtitles or captions?

Both extend your content to a wider audience.

Adding closed captions makes your content accessible to viewers who are deaf or hard of hearing.

Adding subtitles makes your content understandable to audiences around the world who may not otherwise understand the audio language.

How do I add subtitles to my video?

When you upload a video to our enterprise video platform, we optimise it to look great on all devices. We do that using HLS adaptive bitrate streaming. It is a format developed by Apple Inc. and since it is now more than a decade old, it is well established. Many devices natively support it (for example in Safari on Mac/iOS or Edge on Windows). When it is not natively supported, it can be made to work by using some additional JavaScript.

The basis of HLS is to split your video into many small segments, each usually a few seconds in duration, and to provide a manifest file which lists those segments.

We make that for you as part of the packaging stage.

Once that is done, you will see a thumbnail image taken from your video appear in your video library. Click on that and then on the Subtitles tab. You should see a button to upload a new subtitles file. As described above, that should be a WebVTT .vtt file.

When you upload that .vtt file, we validate it and then add those subtitles to your video’s primary HLS playlist.
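To illustrate why this step does not need to touch the media files, here is a simplified Python sketch of adding a subtitles track to the text of a primary manifest. It is not our actual implementation: `add_subtitles_track` is a hypothetical function, and it assumes every variant should point at a single subtitles group named `subs`.

```python
def add_subtitles_track(manifest, media_tag, group_id="subs"):
    """Return the manifest text with one more subtitles track.

    media_tag is a complete #EXT-X-MEDIA:TYPE=SUBTITLES line. It is
    placed before the first #EXT-X-STREAM-INF line, and every variant
    that has no SUBTITLES attribute yet is pointed at group_id.
    """
    output = []
    inserted = False
    for line in manifest.splitlines():
        if line.startswith("#EXT-X-STREAM-INF:"):
            if not inserted:
                output.append(media_tag)
                inserted = True
            if "SUBTITLES=" not in line:
                line = f'{line},SUBTITLES="{group_id}"'
        output.append(line)
    return "\n".join(output) + "\n"


manifest = (
    "#EXTM3U\n"
    "#EXT-X-STREAM-INF:BANDWIDTH=629166,RESOLUTION=426x240\n"
    "h264_240p/video.m3u8\n"
    "#EXT-X-STREAM-INF:BANDWIDTH=1083378,RESOLUTION=640x360\n"
    "h264_360p/video.m3u8\n"
)
german = (
    '#EXT-X-MEDIA:TYPE=SUBTITLES,GROUP-ID="subs",NAME="Deutsch",'
    'DEFAULT=NO,AUTOSELECT=YES,FORCED=NO,LANGUAGE="de",URI="subtitles_de.m3u8"'
)
print(add_subtitles_track(manifest, german))
```

Only the manifest text changes; the video and audio playlists it points to are left alone. The sketch leaves any existing SUBTITLES attribute as it finds it, so a manifest that already references a different group would need extra handling.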

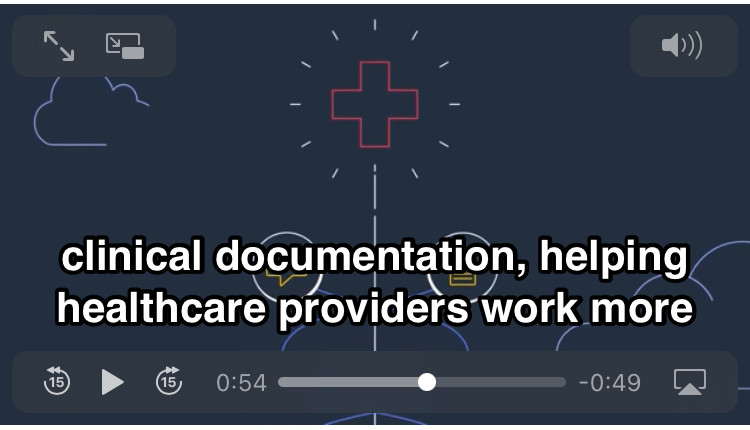

One advantage of that approach is that you only need one URL from us, for the video’s primary manifest. Else you would need to also obtain a URL for each separate subtitles WebVTT file you upload. And that is further complicated when they require authentication, such as using a CDN token which expires. Another advantage is the subtitles are natively supported by the device if it supports HLS. For example the engine that powers our player on desktop will not work on iOS (because iOS does not provide the JavaScript extensions, known as MSE, that are needed). As such we have no opportunity to inject subtitles. We rely on its native HLS playback. Providing subtitles within the primary HLS manifest means they work regardless using its native player, as shown below:

Updated: November 18, 2021